By A Mystery Man Writer

Running BERT without Padding (bytedance/effective_transformer on GitHub).
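Running without padding means that per-token work skips the padding positions of a batch. The sketch below is a minimal illustration of that bookkeeping in plain Python, not the repository's code (which operates on GPU tensors). It assumes a rectangular padded batch and a 0/1 attention mask. The real tokens are gathered into one packed list, their flat positions are recorded, and the outputs are scattered back into padded shape afterwards. `remove_padding` and `restore_padding` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def remove_padding(
    batch: Sequence[Sequence[T]], mask: Sequence[Sequence[int]]
) -> Tuple[List[T], List[int]]:
    """Pack the non-padding tokens of a padded batch into one flat list.

    Assumes every row has the same (padded) length and mask[r][c] is 1 for
    real tokens and 0 for padding. Returns the packed tokens and, for each,
    its flat position row * seq_len + col in the padded batch.
    """
    packed: List[T] = []
    offsets: List[int] = []
    for row, (tokens, row_mask) in enumerate(zip(batch, mask)):
        seq_len = len(row_mask)
        for col, (tok, keep) in enumerate(zip(tokens, row_mask)):
            if keep:
                packed.append(tok)
                offsets.append(row * seq_len + col)
    return packed, offsets


def restore_padding(
    packed: Sequence[T], offsets: Sequence[int], batch_size: int, seq_len: int, pad: T
) -> List[List[T]]:
    """Scatter packed tokens back into a padded batch_size x seq_len layout."""
    flat: List[T] = [pad] * (batch_size * seq_len)
    for tok, pos in zip(packed, offsets):
        flat[pos] = tok
    return [flat[r * seq_len:(r + 1) * seq_len] for r in range(batch_size)]
```

For example, a batch of two rows of length 4 holding 3 and 2 real tokens packs down to 5 tokens, so token-wise layers process 5 items instead of 8; attention still needs the per-sequence boundaries, which the recorded offsets preserve.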

inference · GitHub Topics · GitHub

Full-Stack Optimizing Transformer Inference on ARM Many-Core CPU

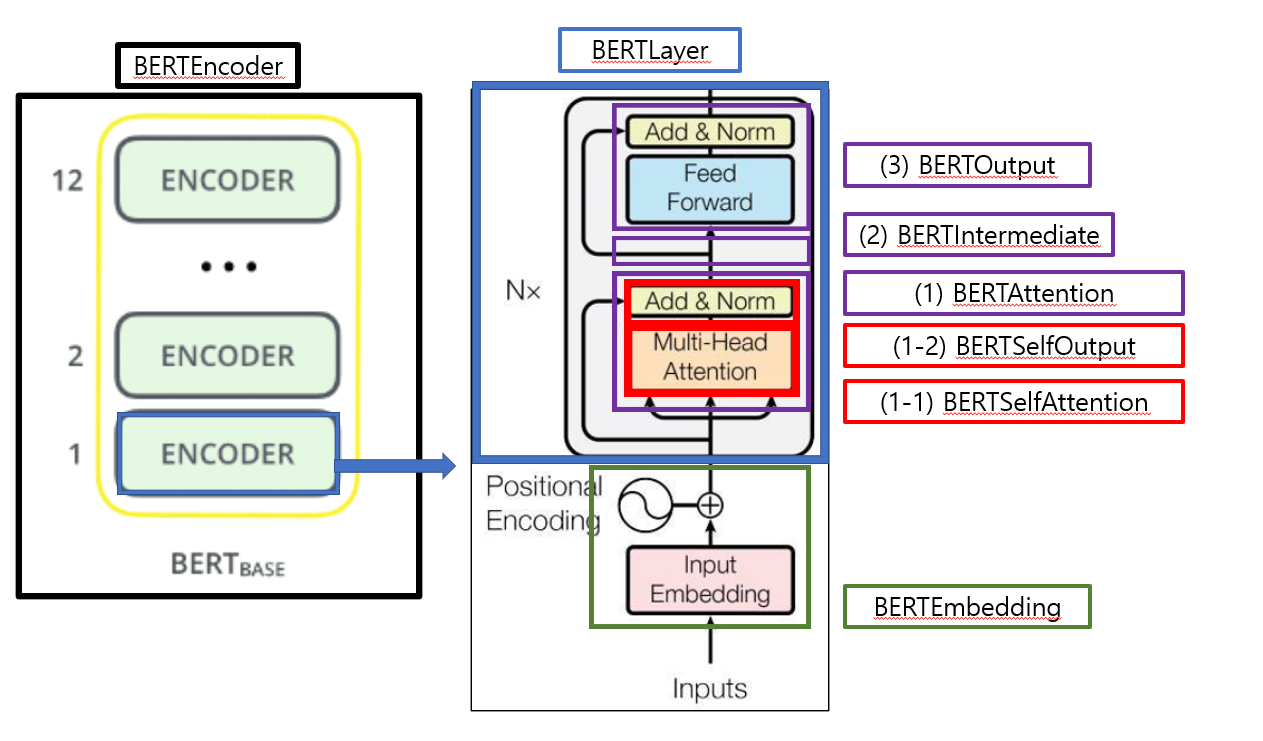

code review 1) BERT - AAA (All About AI)

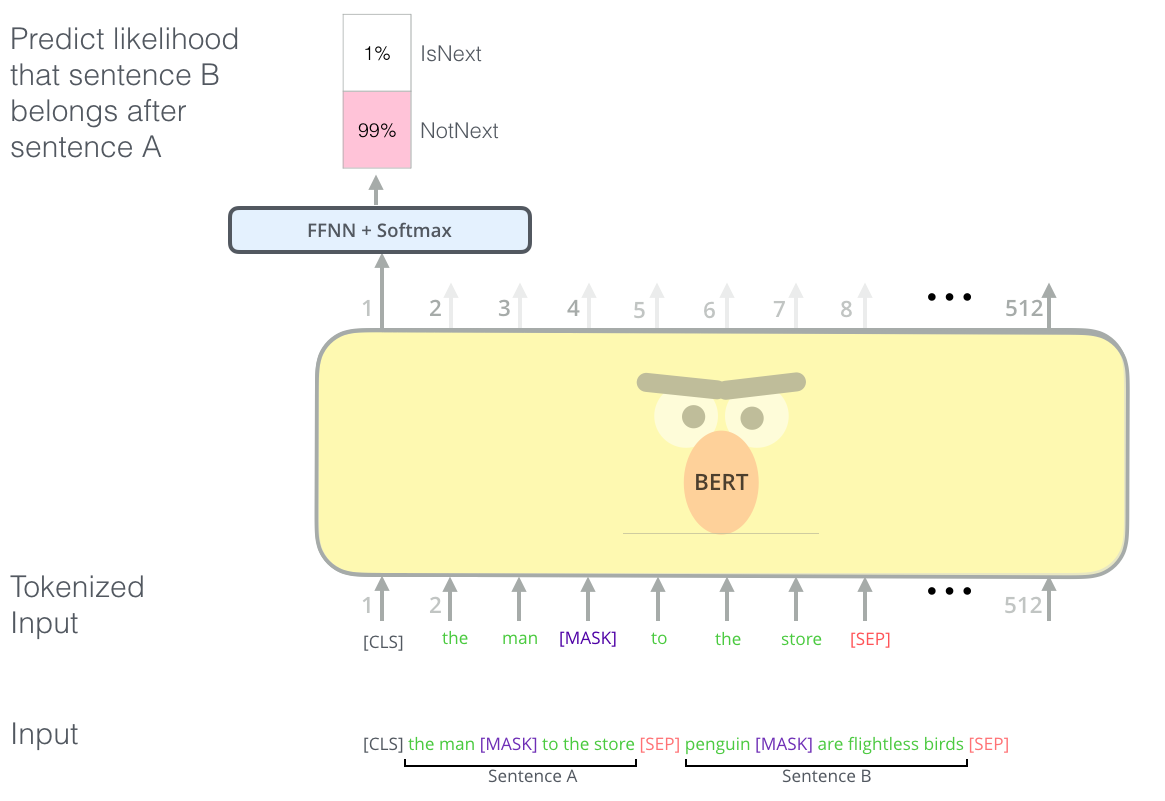

BERT (Bidirectional Encoder Representation From Transformers)

Aman's AI Journal • Papers List

GitHub - rickyHong/Google-BERT-repl

Loading fine_tuned BertModel fails due to prefix error · Issue #217 · huggingface/transformers · GitHub

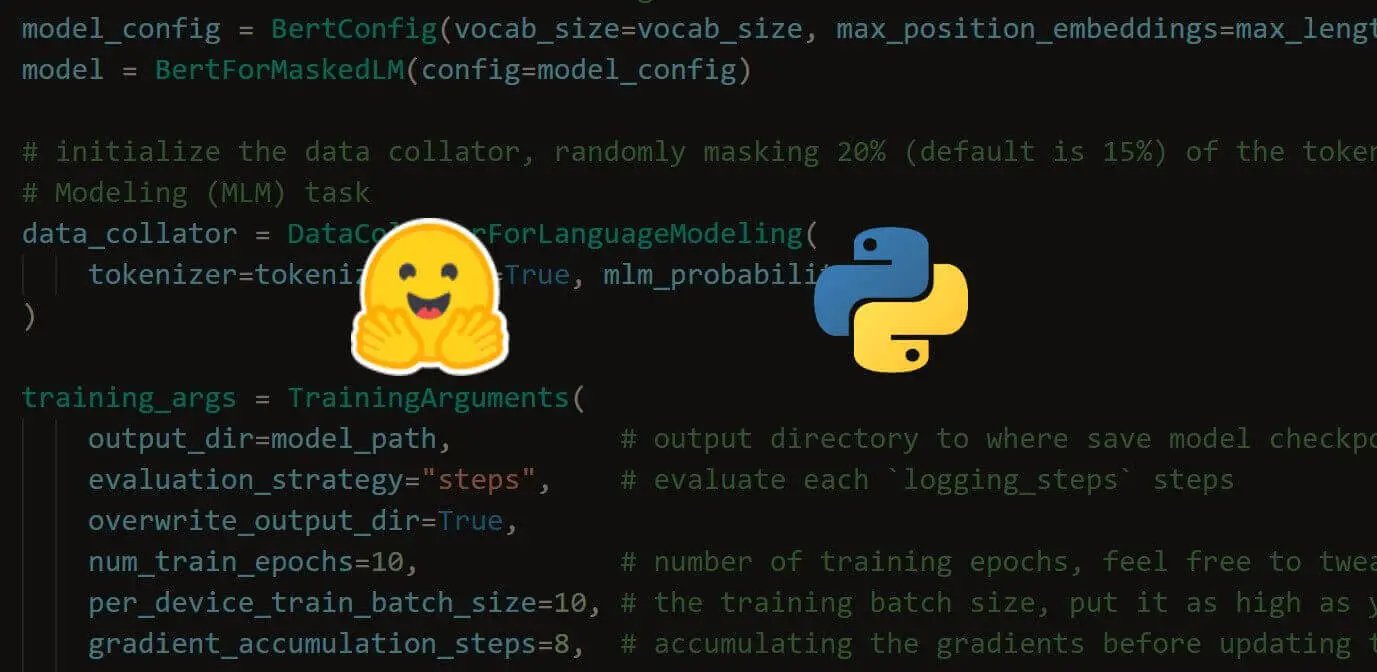

How to Train BERT from Scratch using Transformers in Python - The Python Code

Unable to load the downloaded BERT model offline on a local machine: could not find config.json, and error "no file named ['pytorch_model.bin', 'tf_model.h5', 'model.ckpt.index']"

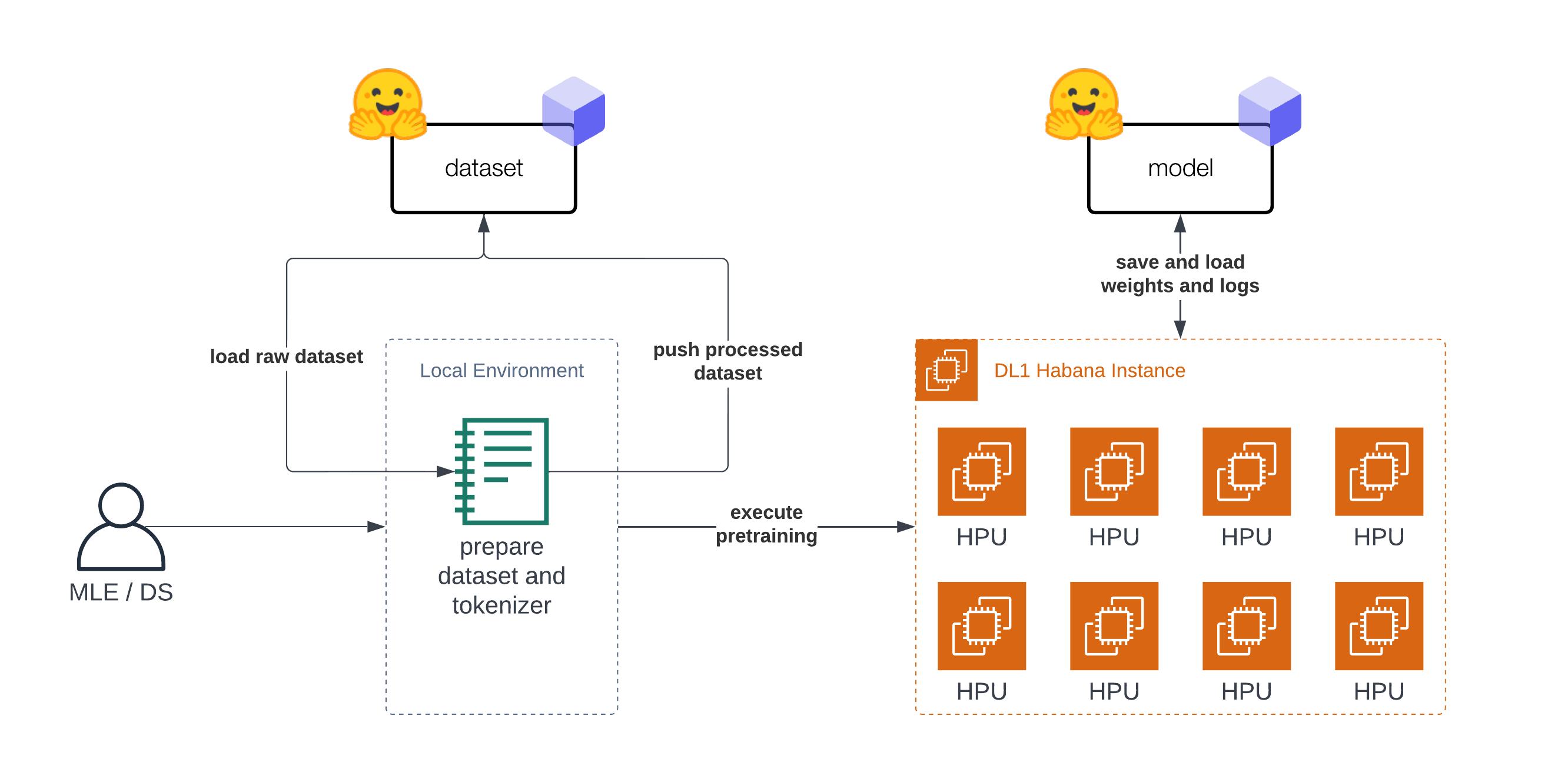

Pre-Training BERT with Hugging Face Transformers and Habana Gaudi